Last updated: 20th April 2024

In this tutorial I am going to show you how to get the title and meta description of a live URL in ASP.NET using C# and VB. The process of automatically parsing or extracting information (like the title and meta tags) from a web page is often known as Screen Scraping. To parse the web page, I am using a library called HtmlAgilityPack.

What is Screen Scraping?

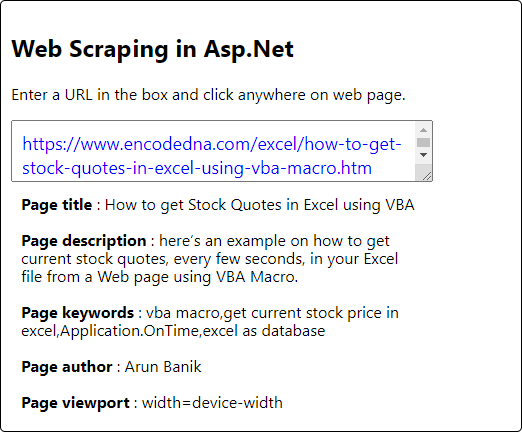

Screen Scraping (similar to web scraping) is not a new concept. It’s the process of extracting a wide range of information from a web page, such as the title, meta descriptions and other vital details like the current stock price. See the image below. Facebook has this feature too: it delights its users by extracting not just the title and meta description of a shared link, but also its images.

The image below shows how, once you provide a live URL, the code extracts page information such as the "title" and meta tags like "keywords", "author" and "viewport".

What is HtmlAgilityPack?

HtmlAgilityPack is a library (.dll) for .NET that provides the methods and properties a developer needs to conveniently extract almost any kind of information from a web page. One feature I found very useful, and worth sharing, is that it can extract data even if the page has bad markup. In HTML, an element usually starts with an opening tag and ends with a closing tag. If the closing tag is missing, HtmlAgilityPack will still extract the data of that element.

How Do I Install HtmlAgilityPack?

First, you need the HtmlAgilityPack.dll library file on your computer.

As I said, this library provides the methods and properties for data extraction. If you are using .NET 4 or later, you should have access to NuGet Packages in Visual Studio, which is the easiest way to install it.

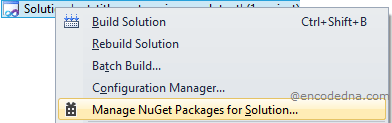

Follow this procedure:

Create a new website using Visual Studio. Open "Solution Explorer", right-click the solution and click the Manage NuGet Packages… option.

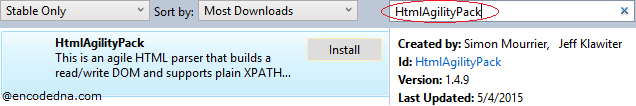

In the NuGet Packages window, type HtmlAgilityPack in the search box and click the Install button.

If you cannot install the library using NuGet, you can download it directly from the HtmlAgilityPack website. Extract the downloaded zip file and copy the library (version HtmlAgilityPack.1.4.6 – Net20) into the "bin" folder of your project. If there is no bin folder, create it in the root directory of your project.

That’s it. You now have the library. Now, let’s code.

In the markup section, I have added a few basic controls. I have a textbox control with "AutoPostBack" set to "true", because I want to extract data as soon as I enter the URL in the box. Therefore, I have set the "ontextchanged" event to call the code-behind procedure "parseWeb". With AutoPostBack enabled, the page posts back and runs "parseWeb" when the text has changed and the textbox loses focus.

The extracted data will be displayed in a DIV element ("divPageDescription").
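Because "parseWeb" receives whatever is typed into "tbEditor", it can help to check the input before handing it to HtmlWeb.Load. Below is a minimal sketch in C#, assuming you only want to accept absolute http or https addresses. The class "UrlInput" and the method "TryGetHttpUrl" are hypothetical names used only for illustration, and the sketch relies only on System.Uri.

```csharp
using System;

// Hypothetical helper (not part of HtmlAgilityPack): validates the text typed
// into the multi-line textbox before it is passed to HtmlWeb.Load.
public static class UrlInput
{
    // Returns true and an absolute http/https Uri when the input looks usable.
    public static bool TryGetHttpUrl(string raw, out Uri uri)
    {
        uri = null;
        if (string.IsNullOrWhiteSpace(raw))
        {
            return false;
        }

        // A multi-line textbox can carry trailing spaces or line breaks, so trim first.
        string candidate = raw.Trim();

        if (!Uri.TryCreate(candidate, UriKind.Absolute, out Uri parsed))
        {
            return false;
        }

        // Only allow web addresses; reject file:, ftp: and similar schemes.
        if (parsed.Scheme != Uri.UriSchemeHttp && parsed.Scheme != Uri.UriSchemeHttps)
        {
            return false;
        }

        uri = parsed;
        return true;
    }
}
```

In "parseWeb" you could call it first, write a short message into "divPageDescription" when it returns false, and otherwise pass uri.AbsoluteUri to HtmlWeb.Load.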

```html
<!DOCTYPE html>
<html>
<body>
    <form runat="server">
        <div class="page">
            <div class="main">
                <div style="line-height:18px; clear:both;">
                    <div>
                        <asp:TextBox ID="tbEditor"
                            placeholder="Enter the URL"
                            AutoPostBack="true"
                            ontextchanged="parseWeb"
                            Width="400px"
                            Height="40px"
                            TextMode="MultiLine"
                            runat="server">
                        </asp:TextBox>
                    </div>

                    <%--show extracted data here--%>
                    <div id="divPageDescription"
                        style="width:400px; padding:10px 0;"
                        runat="server">
                    </div>
                </div>
            </div>
        </div>
    </form>
</body>
</html>
```

Screen Scraping in C# using HtmlAgilityPack

Import the HtmlAgilityPack namespace into your code-behind by adding a "using" directive.

```csharp
using HtmlAgilityPack;
```

Here’s the complete code.

```csharp
using System;
using HtmlAgilityPack;

public partial class SiteMaster : System.Web.UI.MasterPage
{
    protected void parseWeb(object sender, EventArgs e)
    {
        // The textbox is multi-line, so trim stray spaces and line breaks.
        string url = tbEditor.Text.Trim();

        HtmlWeb web = new HtmlWeb();
        HtmlDocument doc = web.Load(url);

        // First get the title of the web page (null if the page has no <title>).
        HtmlNode titleNode = doc.DocumentNode.SelectSingleNode("//title");
        divPageDescription.InnerHtml = "<b> Page title </b>: "
            + (titleNode != null ? titleNode.InnerText : "") + "<br />";

        // Now, parse <META> tag details. SelectNodes returns null when nothing matches.
        HtmlNodeCollection metaTags = doc.DocumentNode.SelectNodes("//meta");
        if (metaTags != null)
        {
            foreach (HtmlNode tag in metaTags)
            {
                // Only report tags that have both a name and a content attribute.
                if (tag.Attributes["name"] != null && tag.Attributes["content"] != null)
                {
                    divPageDescription.InnerHtml += "<br /> "
                        + "<b> Page " + tag.Attributes["name"].Value + " </b>: "
                        + tag.Attributes["content"].Value + "<br />";
                }
            }
        }
    }
}
```
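The code above writes the scraped title and meta values straight into InnerHtml, so a page whose title or meta content contains markup (for example a script tag) would have that markup rendered inside your own page. Below is a minimal sketch of one way to guard against this using System.Net.WebUtility. "PageInfoFormatter" and "FormatEntry" are hypothetical names, and the decode-then-encode step assumes the scraped text may still contain HTML entities that should not be escaped twice.

```csharp
using System.Net;

// Hypothetical helper: builds one "<b> Page label </b>: value<br />" line for
// divPageDescription, escaping the scraped text so it is shown, not executed.
public static class PageInfoFormatter
{
    public static string FormatEntry(string label, string value)
    {
        // Decode first so entities already present in the page are not
        // escaped twice, then encode so any markup is displayed as plain text.
        string safeLabel = WebUtility.HtmlEncode(WebUtility.HtmlDecode(label ?? string.Empty));
        string safeValue = WebUtility.HtmlEncode(WebUtility.HtmlDecode(value ?? string.Empty));
        return "<b> Page " + safeLabel + " </b>: " + safeValue + "<br />";
    }
}
```

For example, inside the meta loop you could append PageInfoFormatter.FormatEntry(tag.Attributes["name"].Value, tag.Attributes["content"].Value) instead of concatenating the raw values.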

Screen Scraping in VB using HtmlAgilityPack

Use the Imports statement to get access to the HtmlAgilityPack methods and properties.

```vb
Imports HtmlAgilityPack
```

```vb
Option Explicit On

Imports HtmlAgilityPack

Partial Class Site
    Inherits System.Web.UI.MasterPage

    Protected Sub parseWeb(sender As Object, e As EventArgs)
        ' The textbox is multi-line, so trim stray spaces and line breaks.
        Dim url As String = tbEditor.Text.Trim()

        Dim web As HtmlWeb = New HtmlWeb()
        Dim doc As HtmlDocument = web.Load(url)

        ' First get the title of the web page (Nothing if the page has no <title>).
        Dim titleNode As HtmlNode = doc.DocumentNode.SelectSingleNode("//title")
        divPageDescription.InnerHtml = "<b> Page title </b>: " & _
            If(titleNode IsNot Nothing, titleNode.InnerText, "") & "<br />"

        ' Now, parse <META> tag details. SelectNodes returns Nothing when nothing matches.
        Dim metaTags As HtmlNodeCollection = doc.DocumentNode.SelectNodes("//meta")

        If metaTags IsNot Nothing Then
            For Each tag As HtmlNode In metaTags
                ' Only report tags that have both a name and a content attribute.
                If tag.Attributes("name") IsNot Nothing AndAlso tag.Attributes("content") IsNot Nothing Then
                    divPageDescription.InnerHtml = divPageDescription.InnerHtml & "<br /> " & _
                        "<b> Page " & tag.Attributes("name").Value & " </b>: " & tag.Attributes("content").Value & "<br />"
                End If
            Next
        End If
    End Sub

End Class
```

I hope you find this article and example useful for your project. You now have a library with which you can conveniently parse a web page and extract information for analysis and other purposes.